Introduction To Replicate

While looking at different platforms for running Stable Diffusion models, I came across Replicate. Stability AI does provide an API for inference against its Stable Diffusion models, but Replicate also lets you run your own custom models alongside open-source ones. It also has a much larger community of open-source models.

In this tutorial we will look at how we can run simple image and video generation models using Replicate.

Setup

- First, go to https://replicate.com/ and sign in with your GitHub account.

- Then create an API token on the tokens page.

- Copy this token into the Secrets section of your Google Colab notebook, with REPLICATE_API_TOKEN as the key and the token as the value.

- Set this token as an environment variable, as shown below.

import os
from google.colab import userdata

# Read the token from the Colab secrets and expose it to the Replicate client
api_token = userdata.get('REPLICATE_API_TOKEN')
os.environ["REPLICATE_API_TOKEN"] = api_token

Installation

We need to install the Replicate Python client to call the platform and to use its higher-level APIs for running ML models.

!pip install replicate

Running The Model

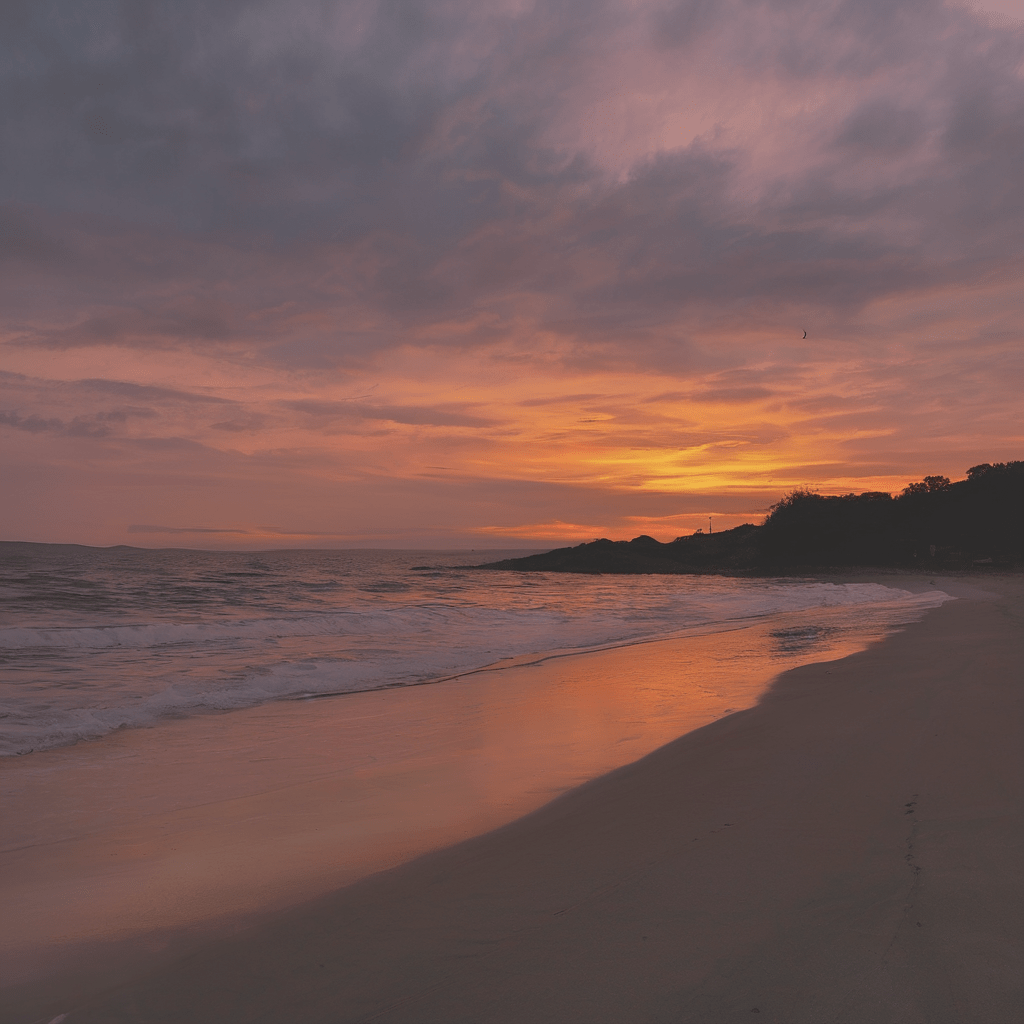

Let’s run the Stable Diffusion XL (SDXL) model for image generation. We use the replicate.run method to pass a prompt to the model. The following seven lines of code run the model and produce the image output.

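import replicate

# Run a pinned SDXL version; the input dict carries the prompt
output = replicate.run(
    "stability-ai/sdxl:39ed52f2a78e934b3ba6e2a89f5b1c712de7dfea535525255b1aa35c5565e08b",
    input={"prompt": "a sunset on the beach"}
)
from IPython.display import Image

# The model returns a list; str() keeps this working if the client returns file objects instead of URL strings
Image(url=str(output[0]))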

Pretty straightforward, right?
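Depending on the model, the value returned by replicate.run may be a single item or a list, and each item may be a URL string or a file-like object. A minimal sketch of one way to normalize that into a list of URL strings is shown below. The helper name output_urls is hypothetical, and it assumes that calling str() on an item yields its URL; the example URL is a stand-in, not a real output.

```python
def output_urls(output):
    """Return the items of a model output as a list of plain strings.

    Hypothetical helper: accepts a single item or a list/tuple of items,
    where each item is a URL string or an object whose str() is its URL.
    """
    items = output if isinstance(output, (list, tuple)) else [output]
    return [str(item) for item in items]


# Stand-in value shaped like the SDXL result above (not a real output URL)
example = ["https://example.com/out-0.png"]
print(output_urls(example)[0])
```

With a helper like this, the display call would become Image(url=output_urls(output)[0]) regardless of which shape the model returns.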

Running The Stable Video Diffusion Model

Ever since Stability AI released Stable Video Diffusion, I have wanted to try it out. Replicate makes it extremely easy to run this model, and the results are spectacular. Keep in mind that this model is not yet ready for commercial use.

from urllib.request import urlretrieve

# Look up the model and pin a specific version by its hash
model = replicate.models.get("stability-ai/stable-video-diffusion")
version = model.versions.get("3f0457e4619daac51203dedb472816fd4af51f3149fa7a9e0b5ffcf1b8172438")

# Create the prediction; the input image is animated into a short clip
prediction = replicate.predictions.create(
    version=version,
    input={
        "cond_aug": 0.02,
        "decoding_t": 7,
        "input_image": "https://raw.githubusercontent.com/rohitrmd/replicate-introduction/main/astronaut.jpg",
        "video_length": "14_frames_with_svd",
        "sizing_strategy": "maintain_aspect_ratio",
        "motion_bucket_id": 127,
        "frames_per_second": 6
    }
)

# Block until the prediction finishes
prediction.wait()
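As a rough sanity check on these inputs, the expected clip length can be estimated from the frame count and the frame rate. The sketch below assumes the video_length value begins with the number of frames (as in "14_frames_with_svd") and that the output contains exactly that many frames played at frames_per_second. Both are assumptions, and clip_duration_seconds is a hypothetical helper.

```python
def clip_duration_seconds(video_length: str, frames_per_second: int) -> float:
    """Estimate clip length, assuming video_length starts with the frame count."""
    if frames_per_second <= 0:
        raise ValueError("frames_per_second must be positive")
    # Assumed format: "<frame count>_frames_...", e.g. "14_frames_with_svd" -> 14
    frame_count = int(video_length.split("_", 1)[0])
    return frame_count / frames_per_second


# 14 frames at 6 frames per second
print(round(clip_duration_seconds("14_frames_with_svd", 6), 2))  # 2.33
```

So with the settings above, the result should be a clip of a little over two seconds.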

Tracking The Prediction Status

Because image, audio, and video generation models can take a long time to produce output, Replicate provides an easy way to track the progress of a prediction. The status can be starting, processing, succeeded, failed, or canceled. Let’s check the status of our prediction.

prediction.status

'succeeded'
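The prediction.wait() call used earlier blocks until the run finishes. To watch the status change along the way, one option is to poll instead. The sketch below is a minimal version of that idea, assuming the prediction object exposes a status attribute and a reload() method that refreshes it, as the Replicate Python client's prediction objects do. The names poll_prediction and DemoPrediction are hypothetical, and DemoPrediction is only a stand-in so the example runs without an API call.

```python
import time

# Statuses after which a prediction will not change any further
TERMINAL_STATUSES = {"succeeded", "failed", "canceled"}


def poll_prediction(prediction, interval=2.0, timeout=600.0):
    """Reload the prediction until it reaches a terminal status.

    Returns the list of distinct statuses observed, in order.
    """
    deadline = time.monotonic() + timeout
    seen = [prediction.status]
    while prediction.status not in TERMINAL_STATUSES:
        if time.monotonic() >= deadline:
            raise TimeoutError(f"prediction still {prediction.status!r} after {timeout}s")
        time.sleep(interval)
        prediction.reload()  # refresh status from the server
        if prediction.status != seen[-1]:
            seen.append(prediction.status)
    return seen


class DemoPrediction:
    """Stand-in for a real prediction object (hypothetical, for illustration)."""

    def __init__(self):
        self._upcoming = iter(["processing", "processing", "succeeded"])
        self.status = "starting"

    def reload(self):
        self.status = next(self._upcoming)


print(poll_prediction(DemoPrediction(), interval=0))
```

With a real prediction, the call would be poll_prediction(prediction), and a final status of failed or canceled means there is no output to display.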

View The Video Output

Let’s verify the video output produced by the model.

# Download the generated video from its output URL
urlretrieve(prediction.output, "/tmp/out.mp4")
from IPython.display import Video

# Embed the video in the notebook straight from the output URL
Video(prediction.output)

That just looks awesome. It’s very easy to get started with Replicate and try out your favorite models. You can even try LLMs on their platform. So keep coding and keep learning. Peace out!

You can find the above code example on my GitHub, along with the Google Colab link.